Research: OCR/ICR/LVLM for digitization of text

Description

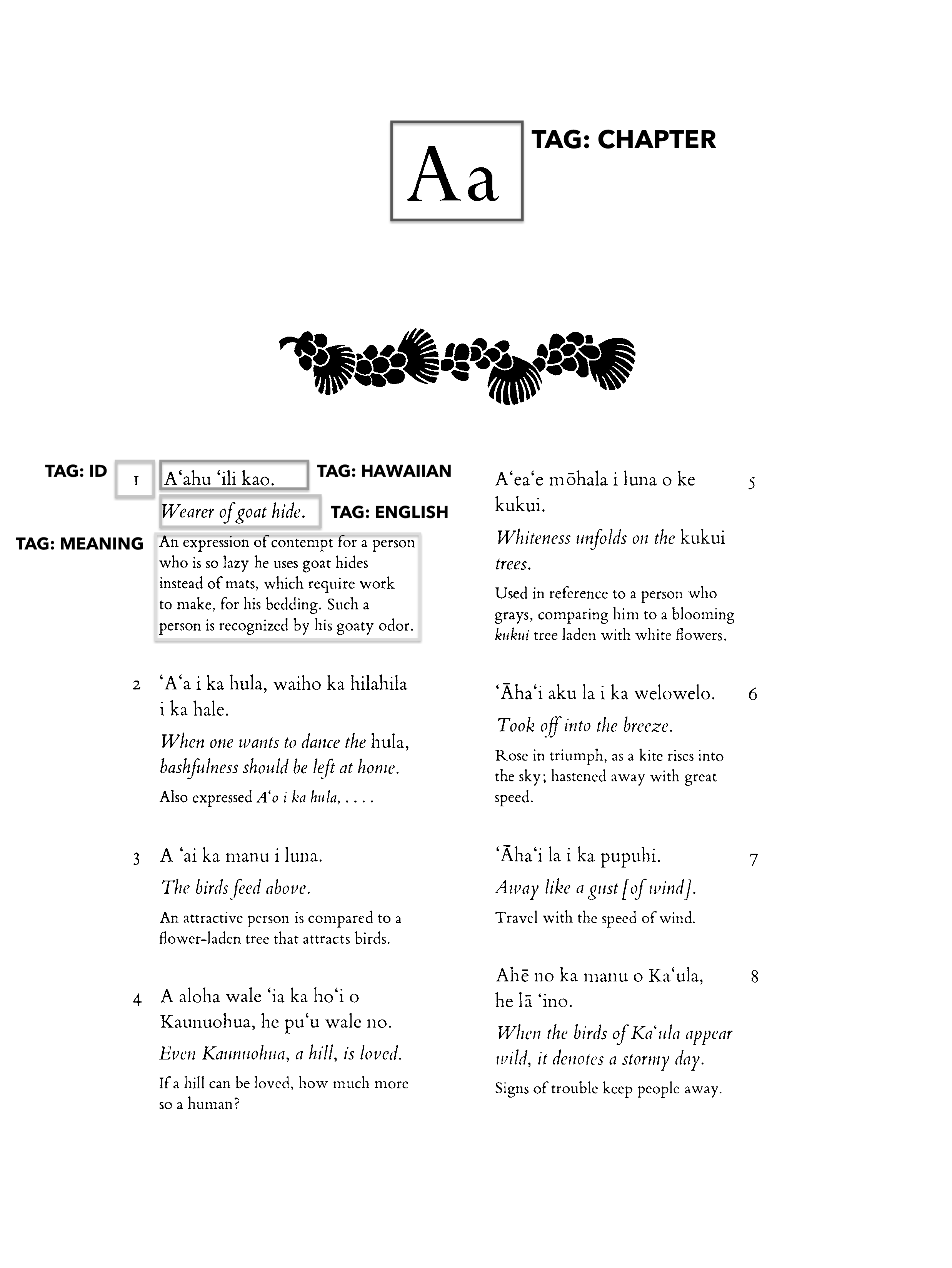

Simplest steps forward to create a searchable ʻŌlelo Noʻeau database.

- collect the text: gather all the ʻŌlelo Noʻeau (have the book, would be cool to include audio forms found in ulukau)

- OCR will recognize characters and convert them into editable formats like .txt etc.

- manual correction: this is important for accuracy

- tagging and metadata: after the text is digitized, add metadata such as place, meaning, themes, and koana

- database organization: store the digitized and tagged proverbs in a well-structured database (e.g., MySQL, PostgreSQL) with fields for the proverb text, its meaning, metadata, and related themes.

- searchable system: implement a searchable system, use full-text search or indexing methods to enable efficient querying

Findings and Reflections

What did you learn?

great breakdown of what's going on when you're using OCR. i didn't go into all the other technologies, but it's fascinating, and gets me thinking about how our own brains breakdown meaning from handwritten language and assign them memories in some instances.

- ocr (optical character recognition) - example: tesseract ocr API (open-source, supports multiple languages)

- icr (intelligent character recognition - handwriting recognition) - example: aws textract (supports handwriting recognition) and Omni AI.

- lvlm / dnn / dtr (large vision-language models + deep neural networks + deep text recongition) - example: gpt-4v (vision-enabled text processing)

- speech-to-text (for audiobooks & narration) - example: whisper (multilingual, high accuracy)

- scanning hardware & mobile apps - example: adobe scan (mobile scanning with ocr)

- edge ai & embedded ocr - example: opencv + tesseract (for real-time ocr on edge devices)

- crowdsourced & hybrid approaches - example: recaptcha (google's crowdsourced digitization)

- blockchain & decentralized digital archives - example: ipfs (decentralized file storage for digital books)

Combining approaches gets us to quicker self correction, improved formatting, language handling, and large amounts of post-processing. I think Omni AI got me from point A to B the quickest, with the least friction.

Other Thoughts

- Curious how libraries / archives go about doing this work now. It seems really disjointed—research says this, archive bought this outdated software, academia is ahead of museums etc.

- In order to make this iron clad, I think making it an open source project, like Wikipedia would make sense. You could invite people with the expertise to contribute to a system everyone benefits from.

- More Hawaiian 'ike needs to be digitized. It's like the printing press—we need to get this knowledge online, archived, and stored somewhere safe. If they burn down all the libraries / servers, we need to have backups.

- Allegedly, US, UK, Germany, China, and France are leaders in putting resources towards digitization of IP and national archives.